Parsing Expression Grammars, part 3

We build on the parsers from part 2 of this series to enhance and extend their capability. In particular, we extend the concept of modular grammars to construct chains of parsers which define a multi-stage transformation pipeline. The parsers forming these chain are enhanced to match and transform tree-structures, rather than being limited to simple sequences. Since the protocol used by all of the parsers is the same, we can easily substitute specialized implementations optimized for use in particular contexts.

Specialized Optimization

Frequently used patterns are worth considering for optimization based on specialization. The previous implementations of rep_ptrn_beh and many_ptrn_beh are defined by expansion into more primitive patterns. By doing the expansion ourselves, and then refactoring, we arrive at more compact and performant implementations.

Repeat Pattern

This pattern matches zero-to-many occurances of a pattern. The accumulated result is a NIL-terminated list of matches.

LET rep_ptrn_beh(ptrn) = \((ok, _), in, (_, env)).[ LET repeat = $SELF SEND ((ok', fail'), in, (?, env)) TO ptrn CREATE ok' WITH \(in', (acc', env')).[ SEND ((ok'', fail'), in', (?, env')) TO repeat CREATE ok'' WITH \(in'', (acc'', env'')).[ SEND (in'', (acc', acc''), env'') TO ok ] ] CREATE fail' WITH \_.[ SEND (in, (NIL, env)) TO ok ] ]

Notice how this implementation avoids binding and passing useless information. For example, this pattern can’t fail, so the original fail continuation is not bound, only the ok continuation is bound. Unused message values are matched with the _ wildcard and irrelevant values are sent as ? undefined. The accumulated result list is built explicitly by the ok’, ok” and fail’ continuation actors. The recursive reference to SELF is captured in the repeat variable, referenced from the ok’ continuation actor.

Many Pattern

This pattern matches one-or-more occurances of a pattern. The accumulated result is a NIL-terminated list of matches.

LET many_ptrn_beh(ptrn) = \((ok, fail), in, (_, env)).[ SEND ((ok', fail), in, (?, env)) TO ptrn CREATE ok' WITH \(in', (acc', env')).[ CREATE repeat WITH rep_ptrn_beh(ptrn) SEND ((ok'', fail), in', (?, env')) TO repeat CREATE ok'' WITH \(in'', (acc'', env'')).[ SEND (in'', (acc', acc''), env'') TO ok ] ] ]

This implementation follows the same general strategy as rep_ptrn_beh, and also creates a repeat actor with that behavior to handle the repeating tail of the pattern once the first occurance is matched. The fail continuation is used only if the first occurance of the pattern fails to match.

Transform Expression

A transform expression applies a function to the accumulated value without changing the environment in a semantic value. The implementation reads more clearly than the explanation.

LET xform_expr(func) = \(acc, env).(func(acc), env) LET xform_ptrn_beh(func) = expr_ptrn_beh(xform_expr(func))

The xform_expr creates a semantic action which applies a transformation function func to the accumulator acc.

The xform_ptrn_beh expands to an expr_ptrn_beh which applies the semantic action created by xform_expr.

We will use the xform_ptrn_beh to convert tuples of matched characters into primitive symbols and numbers. The built-in functions tuple_to_symbol and tuple_to_number perform the actual conversion for us.

LISP/Scheme Grammar

With this machinery in place, we define a grammar for parsing languages in the LISP/Scheme family (based on [1]).

# sexpr = _ (list | atom)

# list = '(' sexpr* :s _ ')' $s

# atom = (number | symbol)

# number = [0-9]+ $#

# symbol = [a-zA-Z0-9]+ $

# _ = [ \t-\r]*

CREATE __ptrn WITH rep_ptrn_beh(

NEW set_ptrn_beh(32, (9, 13), NIL)

)

CREATE symbol_ptrn WITH and_ptrn_beh(

NEW many_ptrn_beh(

NEW set_ptrn_beh((97, 122), (65, 90), (48, 57), NIL)

),

NEW xform_ptrn_beh(\x.tuple_to_symbol(x))

)

CREATE number_ptrn WITH and_ptrn_beh(

NEW many_ptrn_beh(

NEW set_ptrn_beh((48, 57), NIL)

),

NEW xform_ptrn_beh(\x.tuple_to_number(10, x))

)

CREATE atom_ptrn WITH or_ptrn_beh(

number_ptrn,

symbol_ptrn

)

CREATE list_ptrn WITH \msg.[

BECOME scope_ptrn_beh(

NEW seq_ptrn_beh(

NEW one_ptrn_beh(40),

NEW rep_ptrn_beh(sexpr_ptrn),

NEW bind_ptrn_beh(#s),

__ptrn,

NEW one_ptrn_beh(41),

NEW expr_ptrn_beh(lookup_expr(#s))

)

)

SEND msg TO SELF

]

CREATE sexpr_ptrn WITH and_ptrn_beh(

__ptrn,

NEW or_ptrn_beh(

list_ptrn,

atom_ptrn

)

)

Sending the following message:

# "(CAR ( LIST 0 1)\t)" SEND NEW tuple_stream_beh( 40, 67, 65, 82, 32, 40, 32, 76, 73, 83, 84, 32, 48, 32, 49, 41, 9, 41, NIL ) TO NEW start_beh((ok, fail), sexpr_ptrn)

should produce this output:

#ok, <Actor: id=@stream_end>, (#CAR, (#LIST, 0, 1, NIL), NIL), NIL

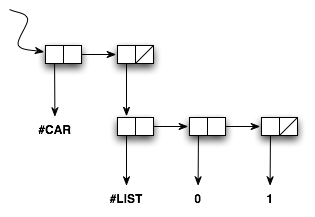

The entire input stream is matched successfully. The accumulator of the resulting semantic value contains the expected list structure, as shown in Figure 1.

Parser Chain

So far, our parsers have all processed streams (sequences) of symbols. The accumulated semantic value has often been a parse-tree (sequence of sequences). We can extend the applications of our parser machinery by chaining parsers together so that the output of one parser becomes the input to the next. In order to support this use, we must be able to match the tree structures (nested sequences) generated by previous parsers. The tuple_ptrn_beh will match these nested sequences. But first, we examine one more sequence-generating pattern.

All Pattern

This pattern matches a sequence of literal symbols. The accumulated result is the sequence of symbols matched. It is defined by recursive expansion into instances of seq_ptrn_beh, one_ptrn_beh and zero_ptrn_beh, with an expr_ptrn_beh to capture the accumulated match.

LET all_ptrn_beh(match) = ( CASE match OF NIL : zero_ptrn_beh (first, rest) : seq_ptrn_beh( NEW one_ptrn_beh(first), NEW all_ptrn_beh(rest), NEW expr_ptrn_beh(\(_, env).( match, env )) ) last : one_ptrn_beh(match) END )

We can demonstrate this pattern by defining a grammar for the keywords SEND, CREATE and BECOME.

CREATE keyword_ptrn WITH alt_ptrn_beh( NEW all_ptrn_beh(83, 69, 78, 68), # SEND NEW all_ptrn_beh(67, 82, 69, 65, 84, 69), # CREATE NEW all_ptrn_beh(66, 69, 67, 79, 77, 69) # BECOME )

Sending the following message:

# "SEND" SEND NEW tuple_stream_beh(83, 69, 78, 68, NIL) TO NEW start_beh((ok, fail), keyword_ptrn)

shoud produce this output:

#ok, <Actor: id=@stream_end>, (83, 69, 78, 68), NIL

The input matches the SEND keyword. The accumulator value is a tuple of the matched characters.

Tuple Pattern

This pattern is the key to processing trees rather than flat sequences. A tuple pattern treats a single input token as a nested sequence and matches it against a given pattern. If the pattern matches the entire nested sequence, then the tuple pattern matches, consuming the input token. Otherwise, the tuple pattern fails, consuming no input.

LET tuple_ptrn_beh(ptrn) = \((ok, fail), in, value).[ CASE in OF (token, next) : [ SEND NEW tuple_stream_beh(token) TO NEW start_beh((ok', fail'), ptrn) CREATE ok' WITH \(sub', value').[ CASE sub' OF $stream_end : [ SEND (SELF, #read) TO next BECOME \in'.[ SEND (in', value') TO ok ] ] _ : [ SEND (in, value) TO fail ] END ] CREATE fail' WITH \(sub', value').[ SEND (in, value) TO fail ] ] _ : [ SEND (in, value) TO fail ] END ]

If the current input in contains a token, then that token is used to produce a new tuple_stream_beh and start_beh initiates matching the pattern ptrn. If the pattern matches, we ensure that there is no left-over input in the nested stream before consuming the original input token and indicating success.

Token Streams

A token stream is used to chain parsers together so that the output of one parser becomes the input of another. A source stream is repeatedly matched against a top-level pattern. The result of each match becomes a token produced by the token stream, or stream_end if there are no more matches. This token stream can be provided as the input stream to another parser, establishing a link in the chain of parser transformations.

LET token_input_beh(ptrn, input) = \(cust, #read).[ SEND ((ok, fail), input, (?, init_env)) TO ptrn CREATE ok WITH \(input', (token, _)).[ CREATE next WITH token_input_beh(ptrn, input') SEND (token, next) TO cust ] CREATE fail WITH \_.[ SEND stream_end TO cust ] ] LET token_stream_beh(ptrn, stream) = \(cust, #read).[ SEND (k_read, #read) TO stream CREATE k_read WITH \input.[ SEND (cust, #read) TO NEW token_input_beh(ptrn, input) ] ]

Our stream protocol is pull-based. A #read request on the stream initiates the processing necessary to produce the next token, or stream_end to indicate that no more input is available. Our parser protocol expects to be given an input which is either stream_end or a pair consisting of a token and the next input position. The token_stream_beh and token_input_beh provide an adapter between the expectations of the stream protocol and the parser protocol.

Summary

We now have quite an extensive toolkit of parsers and semantic actions. By chaining together a series of modular parsers, we can create powerful flexible transformation pipelines. The components in the pipeline can be reused and recombined to implement a wide variety of processes, from lexical analysis and parse-tree generation, to structural optimization and code generation. One key to this flexibility is the ability to match tree-structured, rather than simply sequential, input. This allows us to use the same tools for multiple stages in the recognition and transformation process.

References

- [1]

- I. Piumarta. PEG-based Transformer Provides Front-, Middle- and Back-end Stages in a Simple Compiler, Workshop on Self-Sustaining Systems (S3), Tokyo, Japan, Sept 2010. ACM Digital Library

Tags: actor, composition, grammar, pattern-matching, PEG, protocol, sequence, streams

[…] implementation can be further optimized, as we did with the Repeat Pattern in part 3, by expanding the Star Pattern definition and refactoring, like […]